Continuing to drive responsible adoption of technology

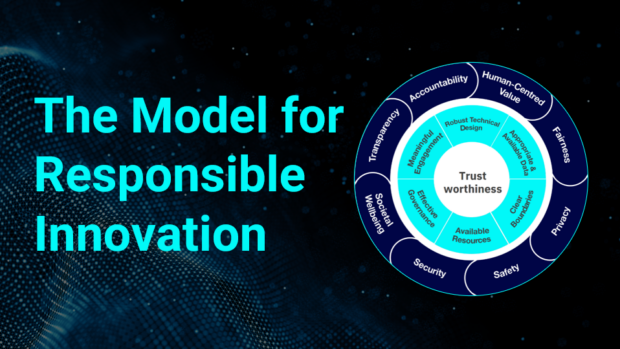

Since its establishment as the Centre for Data Ethics & Innovation in 2018, the Responsible Technology Adoption (RTA) Unit has served as a hub driving government’s work on the responsible use of technology across both the public sector and the broader economy, …