Protecting Trained Models in Privacy-Preserving Federated Learning

Find out how output privacy can protect individuals' data after it has been used to train models.

Financial companies are increasingly using complex algorithms to make decisions regarding loans or insurance - algorithms that look for patterns in data which are associated with risks of default or high insurance claims. This raises risks of bias and discrimination …

The CDEI recently hosted a virtual roundtable with people from nearly 10 different countries who are working on contact tracing apps. The discussion focused on public trust and how it can be built while working at speed to develop and …

The CDEI’s mission is to ensure the UK maximises the benefits of data-driven technologies, that those benefits are fairly distributed across society, and to create the conditions for ethical innovation to thrive. Now, more than ever, this mission is paramount …

Recent reports suggest 9 out of 10 people are biased against women in some way. We wanted to mark International Women’s Day this year by talking about bias in a world of data-driven technology and artificial intelligence, and our forthcoming report on bias in algorithmic decision-making.

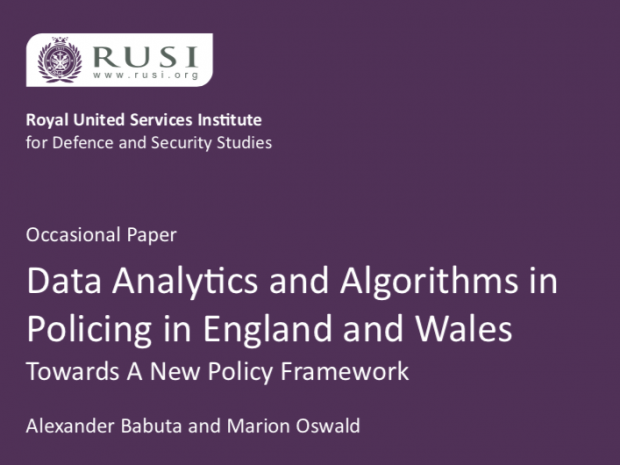

Who gets to decide where the boundary lies between national security concerns, and an individual's right to privacy? Are the right guidelines in place for policing's increasing use of technology and data? These were some of the issues discussed at this week's lecture hosted by RUSI.

It’s easy to argue for more data sharing in the public sector. This would enable more innovation, make it easier to deliver personalised services and make the government more efficient. Right? But what about privacy?

Advances in technical innovation should be something that everyone can look forward to. Or, at a minimum, not be something that causes active worry. Whilst innovation is to be encouraged, innovation alone is not good enough. It needs to be ethical innovation, or none at all.

If 2020 is going to be the year that we get serious about ethical principles, as many commentators in the AI and ethics community claim, I can think of few better places to begin than with personal insurance.
