Responsible innovation

Since its establishment as the Centre for Data Ethics & Innovation in 2018, the Responsible Technology Adoption (RTA) Unit has provided a hub driving government’s work on responsible use of technology across both the public sector and the broader economy, …

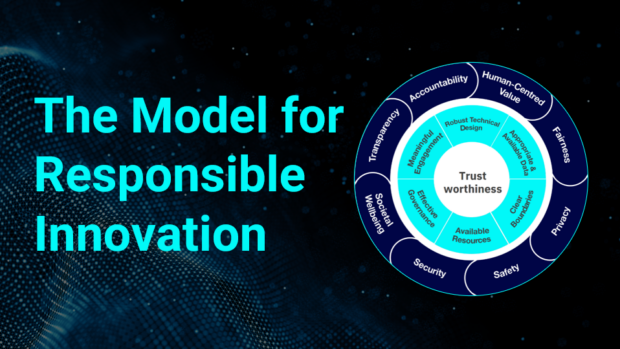

Last week, the Responsible Tech Adoption Unit (RTA) published our Model for Responsible Innovation, a practical framework for addressing the ethical risks associated with developing and deploying projects that use AI or data-driven technology. The Model sets out a vision …

Today, DSIT’s Responsible Technology Adoption Unit (RTA) is pleased to publish our guidance on Responsible AI in recruitment. This guidance aims to help organisations responsibly procure and deploy AI systems for use in recruitment processes. The guidance identifies key considerations …

This post is the first in a series on privacy-preserving federated learning. The series is a collaboration between CDEI and the US National Institute of Standards and Technology (NIST). Advances in machine learning and AI, fuelled by large-scale data availability …

The Centre for Data Ethics and Innovation leads the Government’s work to enable trustworthy innovation using data and artificial intelligence. At the CDEI, we help organisations across the public and private sectors to innovate, by developing tools to give organisations …

The UK government's recently published approach to AI regulation sets out a proportionate and adaptable framework that manages risk and enhances trust while also allowing innovation to flourish. The framework also highlights the critical role of tools for trustworthy AI, …

Building and using AI systems fairly can be challenging, but is hugely important if the potential benefits from better use of AI are to be achieved. Recognising this, the government's recent white paper “A pro-innovation approach to AI regulation” proposes …

I have long believed in the power of data to make a meaningful difference to people's lives. Working in the field for over 30 years, I have seen the ways in which data can be used as a powerful force …

Self-driving vehicles have the potential to radically transform the UK’s roads. But to enable their benefits and achieve the government’s ambition to ‘make the UK the best place in the world to deploy connected and automated vehicles’, developers and manufacturers …

In February 2020, we published our review of online targeting, which included a number of recommendations to government, regulators and industry regarding how data is used to shape the online experience. In the final report, we recommended that online platforms …
